There is no one way to do things. One of my favourite quotes about technology that highlights this is from Robert M. Pirsig:

> Technology presumes there's just one right way to do things and there never is.

In my current management context (a phrase I’ve adopted via Cem Kaner) I’m the quality control guy for an enterprise program that consists of five projects. Sub-projects, really. Each one of them a commercial-off-the-shelf (COTS) implementation. Each one of them a critical component of the acquiring organization’s long-term technology adoption plans. Each one of them completing the complexity trifecta: people, process, technology.

Checklists to the rescue. Like this one for the sub-project test plans. It’s effectively a functional decomposition of the test activities on a project. The intent is that each sub-project knows what it is doing in each of the following major categories, and that each also has evidence backing up its claim that it knows what it is doing.

- Test Environment Management

- Test Data Management

- Test Management

- Acceptance Management

Remember my context. Enterprise. Commercial-off-the-shelf implementation. We’re not testing the product but the installation/configuration of the product. We’re investigating potential barriers to solution adoption and to realizing the benefits that were planned.

Diving deeper…

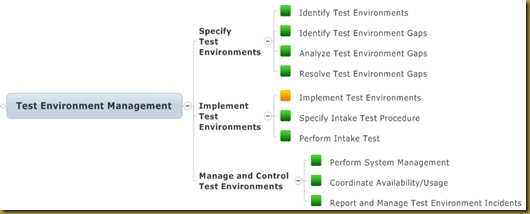

Test Environment Management

BTW the colours are a risk assessment. In the enterprise, shared test environments are more complex to deal with than those you control exclusively. The closer you are to acceptance testing, the more likely you will have a shared environment to work in. Systems are inter-connected nowadays, eh. If the system itself is net new, it’s going to connect to something that isn’t new. And so, there be capability gaps that have to be closed, tested in isolation, then tested as part of regular business operations (end-to-end) testing.

Test Data Management

Again, not as big a deal if you control the environment exclusively. If you don’t, and chances are that you don’t if you’re working with an enterprise system, then you have to work out how you are going to handle test data.

Test Management

Many people think of the role of a test manager and start/stop at test management. And that’s one of my major motivations behind building this test plan checklist.

I differentiate “designing testing” from “designing tests”. Word play, perhaps, but in my mind creating a test strategy and formulating a plan that implements that strategy is designing a system to complete the testing. That’s “designing testing”. Designing tests, on the other hand, is the activity of identifying, crafting and executing tests.

Really important: None of these checklist items demand test scripts/procedures. How much (or how little) you need scripts is part of planning and designing testing.

Acceptance Management

This may not be an activity for the test manager, but in most enterprises there is a concept of getting “sign off”. As I’ve mentioned before on this blog (at least I think I have), there are significant advantages to designing the sign-off process around the management context at hand.

Test results play a role in requesting and achieving sign-off, and that’s why it’s here. Even if the project managers choose to assume that role for themselves, as a test manager you’re involved.

The Checklist

My test plan template for the sub-projects consisted of a cover page (enterprise art provided by the enterprise of course), the list of processes in full mind map glory, and a set of test planning principles to uphold (such as “plan to exercise the risky things first”).

The only other twist is that each leaf node on the mind map has a spot for an “if not now, then when” data point, just in case there are things yet to be discovered.
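For readers who think in code, here is a minimal sketch of how the mind map could be modelled as a tree. It is my illustration under stated assumptions, not the actual template: the four category names come from the checklist above, but the class name `ChecklistItem`, the helper `open_items`, the field names, and every leaf item and its values are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Optional


@dataclass
class ChecklistItem:
    """One node of the test plan mind map (hypothetical structure)."""
    name: str
    evidence: Optional[str] = None         # what backs up the claim that this is covered
    if_not_now_when: Optional[str] = None  # the "if not now, then when" data point
    children: List["ChecklistItem"] = field(default_factory=list)

    def leaves(self) -> Iterator["ChecklistItem"]:
        """Yield every leaf under this node (or the node itself if it has no children)."""
        if not self.children:
            yield self
        else:
            for child in self.children:
                yield from child.leaves()


def open_items(root: ChecklistItem) -> List[str]:
    """Names of leaves that have neither evidence nor a planned 'when'."""
    return [
        leaf.name
        for leaf in root.leaves()
        if leaf.evidence is None and leaf.if_not_now_when is None
    ]


# Category names are from the checklist; the leaf items are made up for illustration.
plan = ChecklistItem("Sub-project test plan", children=[
    ChecklistItem("Test Environment Management", children=[
        ChecklistItem("Shared environment access", evidence="booking calendar"),
    ]),
    ChecklistItem("Test Data Management", children=[
        ChecklistItem("Data refresh approach",
                      if_not_now_when="before acceptance testing"),
    ]),
    ChecklistItem("Test Management", children=[
        ChecklistItem("Test strategy"),
    ]),
    ChecklistItem("Acceptance Management", children=[
        ChecklistItem("Sign-off process"),
    ]),
])

print(open_items(plan))  # ['Test strategy', 'Sign-off process']
```

The point of the sketch is the same as the checklist's: a leaf is settled either by evidence today or by an explicit "when", and anything with neither is a conversation worth having.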

It’s a thinking tool, I think. And hope.